According to the World Health Organization (WHO), approximately 1.35 million people die every year in road traffic accidents. Most of these accidents are caused by human error. Human drivers can be reckless: sometimes they follow the rules, and sometimes they do not. Many road accidents occur when drivers violate traffic rules and regulations, which are enacted precisely to ensure safe driving. Alongside other technological advancements, the rising number of traffic accidents has also motivated automobile manufacturers to pursue autonomous vehicles (AVs). With multidimensional sensing, analysis, decision-making, and control systems, autonomous vehicles can travel much more safely than human drivers. A basic reason is that machines do not get tired. Furthermore, their intelligent systems are designed to ensure that autonomous vehicles do not violate traffic rules. Despite these clear benefits, many people still doubt the reliability of autonomous vehicles.

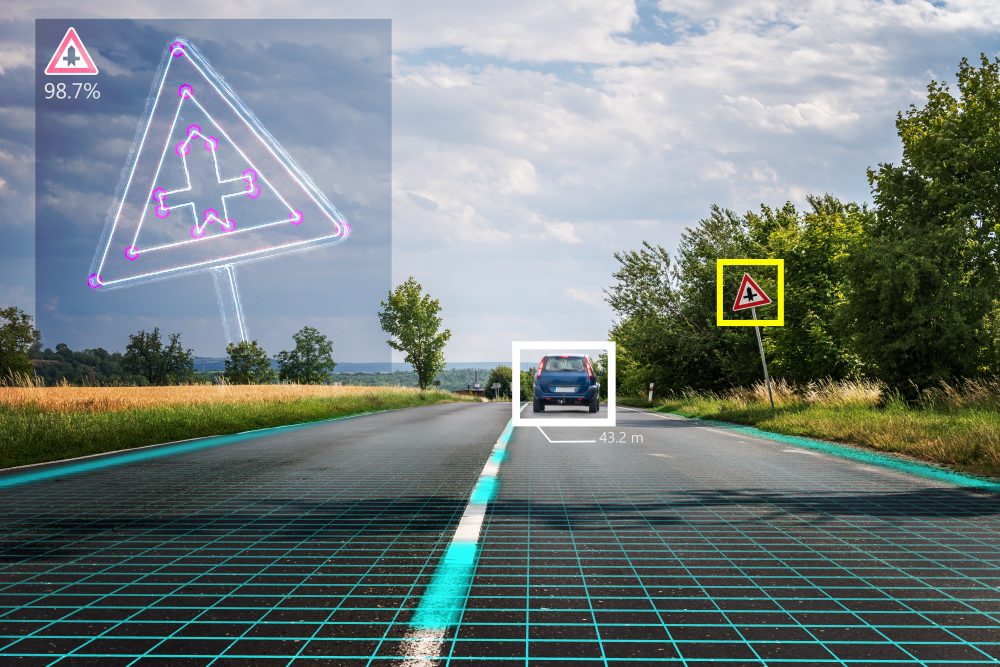

Among all the concerns about autonomous vehicles, concerns about their recognition systems are among the most important. It is therefore worth examining how an autonomous vehicle’s recognition system responds to the many different road and traffic signs. The recognition system is what enables autonomous vehicles to act intelligently. Camera inputs, together with the state-of-the-art results of recognition systems, are what demonstrate the effectiveness and intelligence of autonomous vehicles.

Recognition of Traffic Signals Using Camera Input

Radar, LiDAR, GPS, and ultrasonic sensors help autonomous vehicles with distance measurement and geofencing of the external environment. Cameras, by contrast, provide a visual view of that environment. Close coordination among all these sensing units is the key to the success of autonomous vehicles. Let’s look at how autonomous vehicles interpret camera input to recognize road signs and traffic signals.

During development, the perception and interpretation model of an autonomous vehicle is trained on the various objects and environments it is expected to encounter in the real world. This information is stored in a matrix for each object. For example, for a turn-right sign, images of the sign in sunny, cloudy, rainy, and snowy weather, as well as in darkness, are stored in its respective matrix. The same is done for other road signs and traffic signals. While the vehicle is travelling, its camera continuously captures images of the surroundings. These images are sent to the interpretation model, where they are cleaned up to filter out noise and to fix resolution-related issues. From there, they are passed to the image processing algorithm.
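The article does not say which noise filter is used. A median filter is one common choice for removing isolated "salt-and-pepper" noise from camera frames. The minimal sketch below makes two assumptions: a grayscale image stored as a list of rows of 0-255 intensities, and a hypothetical function name, `median_filter_3x3`. It does not address the resolution fixes mentioned above.

```python
from statistics import median_low


def median_filter_3x3(gray):
    """Replace each pixel with the median of its 3x3 neighbourhood.

    `gray` is a list of rows of integer intensities (0-255). At the borders,
    only the neighbours that fall inside the image are used, and median_low
    keeps the result an actual pixel value when the window size is even.
    """
    height, width = len(gray), len(gray[0])
    filtered = []
    for y in range(height):
        row = []
        for x in range(width):
            window = [
                gray[ny][nx]
                for ny in range(max(0, y - 1), min(height, y + 2))
                for nx in range(max(0, x - 1), min(width, x + 2))
            ]
            row.append(median_low(window))
        filtered.append(row)
    return filtered


# A flat grey patch with one bright "salt" noise pixel in the centre.
noisy = [
    [100, 100, 100],
    [100, 255, 100],
    [100, 100, 100],
]
print(median_filter_3x3(noisy))  # [[100, 100, 100], [100, 100, 100], [100, 100, 100]]
```

A median is preferred over a simple average here because a single outlier pixel does not pull the result toward itself; it is simply outvoted by its neighbours.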

For autonomous vehicles, image processing has three fundamental stages. The first stage is pre-processing, where the RGB colour image is converted to the Hue-Saturation-Value (HSV) colour space. The second stage is detection. Here the transcendental colour threshold method is applied for initial filtering, and the image is scanned to establish the Region of Interest (ROI). The third stage is recognition, where image processing is finalized and the meaning of the captured image is decided. Commonly, Histogram of Oriented Gradients (HOG) features and a Support Vector Machine (SVM) classifier are used to recognize the type of the captured image, i.e., whether it is a traffic signal or a road sign, and to extract its information. For example, if the image is recognized as a STOP sign, the word "STOP" is extracted as the useful information. It is then passed to the vehicle control system, which begins decelerating or braking.
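The three stages can be sketched end to end in plain Python. This is a toy illustration under several assumptions, not the method any particular manufacturer uses. An ordinary hue/saturation/value threshold for red stands in for the colour threshold step. The feature extractor is a simplified single-cell HOG (real HOG splits the ROI into cells and normalises over blocks). The SVM step shows only how a trained linear SVM makes a decision. All function names, thresholds, and weights are hypothetical. Real weights would be learned from training data, and a multi-class recognizer would typically combine several such binary decisions.

```python
import colorsys
import math


def to_hsv(rgb_image):
    """Stage 1 (pre-processing): map each (R, G, B) pixel, 0-255, to (H, S, V) in [0, 1]."""
    return [[colorsys.rgb_to_hsv(r / 255, g / 255, b / 255) for (r, g, b) in row]
            for row in rgb_image]


def red_mask(hsv_image, hue_margin=0.05, s_min=0.5, v_min=0.3):
    """Stage 2 (detection): keep saturated, bright pixels whose hue is red.

    Red sits at both ends of the hue circle, so hues near 0 or near 1 both count.
    """
    return [[(h <= hue_margin or h >= 1 - hue_margin) and s >= s_min and v >= v_min
             for (h, s, v) in row] for row in hsv_image]


def find_roi(mask):
    """Stage 2 (detection): bounding box (top, left, bottom, right) of the mask, or None."""
    points = [(y, x) for y, row in enumerate(mask) for x, hit in enumerate(row) if hit]
    if not points:
        return None
    ys, xs = zip(*points)
    return min(ys), min(xs), max(ys), max(xs)


def orientation_histogram(gray, bins=9):
    """Stage 3 (features): a simplified, single-cell HOG.

    Gradients use central differences; each interior pixel adds its gradient
    magnitude to one of `bins` unsigned orientation bins covering 0-180 degrees.
    The histogram is then L2-normalised.
    """
    hist = [0.0] * bins
    for y in range(1, len(gray) - 1):
        for x in range(1, len(gray[0]) - 1):
            gx = gray[y][x + 1] - gray[y][x - 1]
            gy = gray[y + 1][x] - gray[y - 1][x]
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            # The modulo guards against float rounding producing exactly 180.0.
            hist[int(angle // (180.0 / bins)) % bins] += math.hypot(gx, gy)
    norm = math.sqrt(sum(v * v for v in hist)) or 1.0
    return [v / norm for v in hist]


def linear_svm_predict(features, weights, bias):
    """Stage 3 (recognition): a trained linear SVM decides by the sign of w.x + b."""
    return sum(w * f for w, f in zip(weights, features)) + bias > 0


# A 5x5 grey frame with a 2x2 red patch: detection should box the patch.
red, grey = (200, 20, 20), (90, 90, 90)
frame = [[red if 2 <= y <= 3 and 2 <= x <= 3 else grey for x in range(5)]
         for y in range(5)]
print(find_roi(red_mask(to_hsv(frame))))  # (2, 2, 3, 3)

# A vertical edge puts all gradient energy in the 0-degree bin.
edge = [[0, 0, 255, 255] for _ in range(4)]
features = orientation_histogram(edge)
weights = [1.0] + [-1.0] * 8  # hypothetical; a real SVM learns these
print(linear_svm_predict(features, weights, bias=-0.5))  # True
```

In a fuller pipeline, the orientation histogram would be computed on the grayscale crop inside the detected ROI rather than on the whole frame. Restricting the expensive recognition step to that small region is the main reason for the detection stage.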

Challenges

While methods for recognizing camera input appear substantially accurate, this highly intelligent algorithm is not without problems. As competition grows and research on autonomous vehicles expands, it has been noted that the recognition system faces, or will soon face, many challenges. Some of the most critical are as follows:

2D Perspective of Cameras

The world around us is three-dimensional, but a camera captures only a 2D view of it. Although many companies have launched 3D cameras, their efficacy for autonomous vehicles has yet to be evaluated. Because the camera sees 3D objects from a 2D perspective, some information, such as depth, can be lost. As a result, an AV’s cameras may not give full insight into the external environment, which ultimately reduces the effectiveness of autonomous vehicles.
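The loss of depth can be made concrete with the standard pinhole camera model. This is an idealization that ignores lens distortion, used here only for illustration. A point $(X, Y, Z)$ in camera coordinates projects to pixel $(u, v)$, where $Z$ is the distance along the optical axis, $f$ is the focal length in pixels, and $(c_x, c_y)$ is the principal point:

```latex
u = f\,\frac{X}{Z} + c_x, \qquad v = f\,\frac{Y}{Z} + c_y

% Scaling the point by any k > 0 leaves the pixel unchanged:
u' = f\,\frac{kX}{kZ} + c_x = f\,\frac{X}{Z} + c_x = u, \qquad
v' = f\,\frac{kY}{kZ} + c_y = f\,\frac{Y}{Z} + c_y = v
```

Every point along the same ray through the camera centre lands on the same pixel. A single 2D image therefore does not determine how far away an object is, and that depth must come from other cues or sensors.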

Globally Varying Road Sign Designs

Road signs are broadly similar all over the world, but some are region-specific or are displayed differently from the rest of the world. For example, in most countries the STOP sign is written inside a red octagon. However, in countries such as Israel, Ethiopia, and Pakistan, a hand is displayed to indicate stop. Similarly, some Arab countries write STOP in Arabic. This makes it a challenge for engineers to develop a recognition system that can recognize all kinds of road signs as well as different traffic signals.
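One way to frame this challenge, offered as an assumption rather than something the article prescribes, is to treat each regional design as a separate visual class that the recognizer must learn. Every class is then mapped to one shared meaning, so the vehicle control system only ever receives "STOP". All class labels and action names below are hypothetical.

```python
# Hypothetical visual classes a recognizer might be trained to output.
# Each regional STOP design is its own class because it looks different.
SIGN_MEANING = {
    "stop_octagon_text": "STOP",    # word STOP inside a red octagon
    "stop_hand_symbol": "STOP",     # hand symbol used in some countries
    "stop_arabic_text": "STOP",     # STOP written in Arabic
}

# Hypothetical mapping from a sign's meaning to a control command.
CONTROL_ACTION = {"STOP": "decelerate_and_brake"}


def action_for(label):
    """Map a recognized visual class to a control action, or None if unknown."""
    meaning = SIGN_MEANING.get(label)
    return CONTROL_ACTION.get(meaning) if meaning else None


print(action_for("stop_hand_symbol"))  # decelerate_and_brake
print(action_for("unknown_design"))    # None
```

The mapping itself is trivial. The hard part the article describes is teaching the recognizer to output the right visual class for every design in the first place.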

Effectiveness of AV Algorithms

Varying road sign designs give rise to another challenge: the need for a vast database and for effective machine learning. The behaviour of autonomous vehicles is chiefly governed by machine learning algorithms. The first challenge, therefore, is to build a vast database of road signs along with their respective multidimensional matrices. Building such a database does not solve the problem entirely, however. It is also a major challenge to train autonomous vehicle systems to the point where they can recognize these signs flawlessly in the real world.
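The database grows multiplicatively, because every sign design needs examples under every condition. The sketch below is a minimal coverage check that lists the (sign, condition) cells of the database that have no training images yet. It uses the conditions this article lists for each sign’s matrix (sunny, cloudy, rainy, snowy, dark). The sign labels and the dictionary layout are hypothetical.

```python
from itertools import product

# Conditions named in this article for each sign's matrix.
CONDITIONS = ("sunny", "cloudy", "rainy", "snowy", "dark")


def missing_cells(sign_classes, samples):
    """Return (sign, condition) pairs that have no training images yet.

    `samples` maps (sign, condition) to a list of images in any representation.
    """
    return [
        (sign, condition)
        for sign, condition in product(sign_classes, CONDITIONS)
        if not samples.get((sign, condition))
    ]


signs = ["stop_octagon_text", "stop_hand_symbol"]
samples = {("stop_octagon_text", c): ["img"] for c in CONDITIONS}
print(len(signs) * len(CONDITIONS))   # 10
print(missing_cells(signs, samples))  # the five hand-symbol cells
```

With only two designs there are already ten cells to fill. Every new regional design adds five more, before even counting variations such as viewing angle or partial occlusion.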

Conclusion

To encourage the adoption of autonomous vehicles, it is very important to educate people about their efficacy and safety. Only when consumers are confident that an autonomous vehicle, with a flawless recognition system, is intelligent enough to make decisions on its own will the dream of transforming future mobility with AVs be realized. The intelligent recognition of camera inputs is undoubtedly admirable. AVs can recognize distant and highly elevated road signs, with very fast processing and millisecond-level actuation. Since continued learning is a prerequisite for excellence, the same principle should apply here. The future of autonomous vehicles depends closely on confronting the challenges ahead.