For years, many automobile manufacturers have been working on realizing the concept of autonomous vehicles (AVs). The concept became a hot topic when Tesla’s CEO, Elon Musk, announced a crash program to bring autonomous vehicles onto the roads, and fierce competition followed. Ground realities, however, suggest that people are still reluctant to buy such vehicles. Plenty of studies and articles indicate that selling autonomous vehicles is a much bigger challenge than manufacturing them. Even the companies leading this effort, such as Tesla, face many problems in selling AVs to potential customers. The main reason is that people are reluctant to trust autonomous operation, and they still do not understand how an autonomous vehicle perceives its surroundings. Let’s look at how perceptions are formed in AVs and compare two main techniques to see how decisions are made.

How Are Perceptions Made?

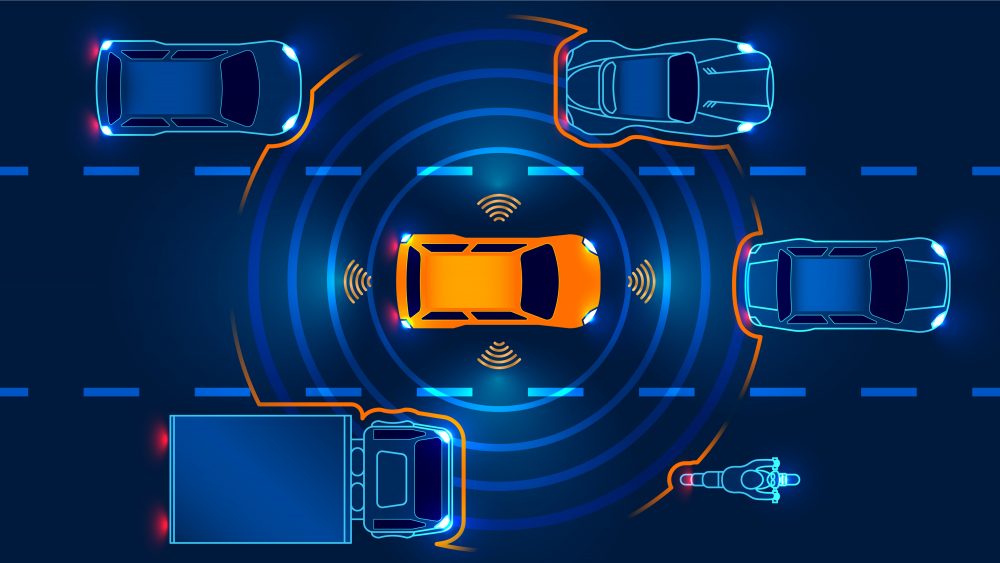

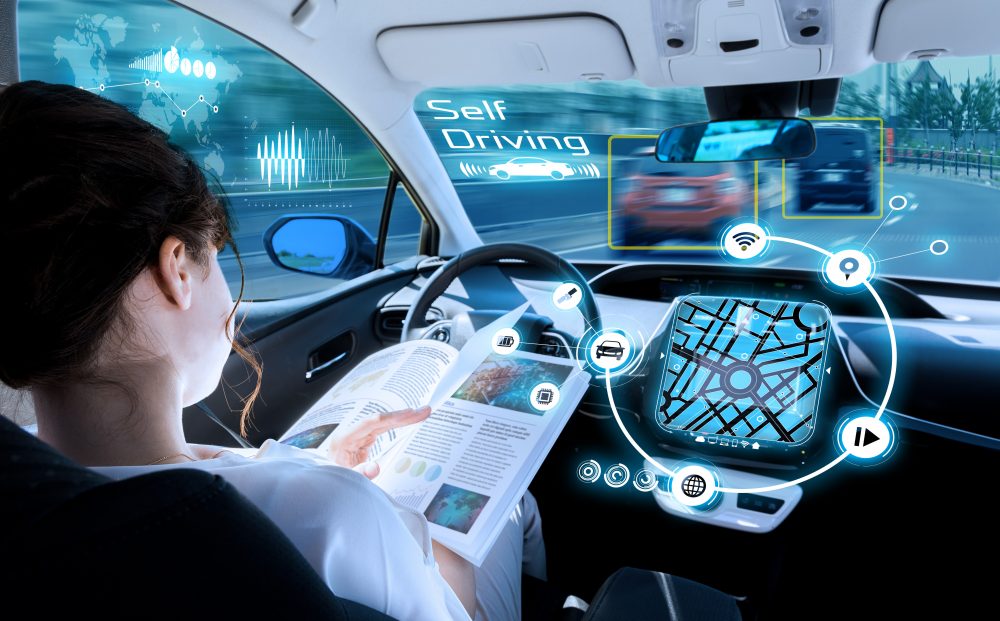

Autonomous vehicles form perceptions in much the same way that human drivers do in conventional cars. Human sensory abilities are replicated with various sensors such as radar, LiDAR, ultrasonic sensors, sonar, GPS, and multiple cameras. These are the sources from which perceptions are built. Note that not every manufacturer uses all of these sensors: some use only a subset, and others employ different sensors altogether. In general, though, the sensors listed above are among the most commonly used sources for building perceptions in autonomous vehicles.

The data received from these sensors is fed to an interpreter, which translates the raw data into meaningful information. This interpreted information is then used to make decisions and, in turn, to drive the autonomous vehicle.

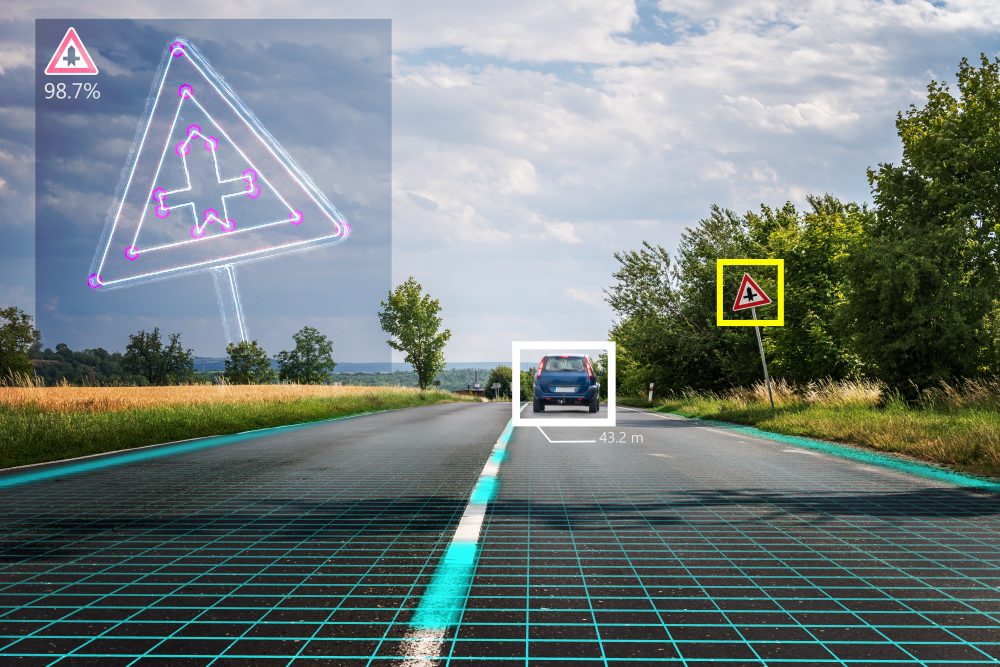
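The sensor-to-interpreter-to-decision flow described above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the names `SensorReading`, `interpret`, and `decide`, the "nearest object wins" fusion rule, and the 1.5-second reaction time are all hypothetical and are not taken from any real AV stack.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SensorReading:
    sensor: str        # hypothetical source name, e.g. "radar", "lidar", "camera"
    distance_m: float  # distance to the nearest object reported by that sensor


def interpret(readings: List[SensorReading]) -> float:
    """Turn raw readings into one piece of information: the nearest obstacle.

    Assumption: taking the minimum is a deliberately conservative stand-in
    for real sensor fusion.
    """
    if not readings:
        raise ValueError("at least one sensor reading is required")
    return min(reading.distance_m for reading in readings)


def decide(nearest_obstacle_m: float, speed_mps: float,
           reaction_time_s: float = 1.5) -> str:
    """Brake if the obstacle is closer than the distance covered
    during the (assumed) reaction time, otherwise keep cruising."""
    safe_gap_m = speed_mps * reaction_time_s
    return "brake" if nearest_obstacle_m < safe_gap_m else "cruise"


readings = [
    SensorReading("radar", 42.0),
    SensorReading("lidar", 40.5),
    SensorReading("camera", 45.0),
]
nearest = interpret(readings)
print(nearest, decide(nearest, speed_mps=20.0))
```

A production system would weigh each sensor by its reliability and track objects over time; the point here is only the separation between raw readings, interpreted information, and the resulting decision.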

Among all of the sensory equipment, the camera is considered the most vital part of an autonomous vehicle because it is a visual instrument: it replicates the human eye and can identify objects in a scene much as a human does. But processing this information is not as simple as capturing an image and making a decision. Many intelligent techniques, including deep learning, are used to refine the visuals obtained from the cameras and embed intelligence in the vehicle.

The following two key techniques are used to process and analyse camera visuals in an autonomous vehicle:

Geometrical Analysis

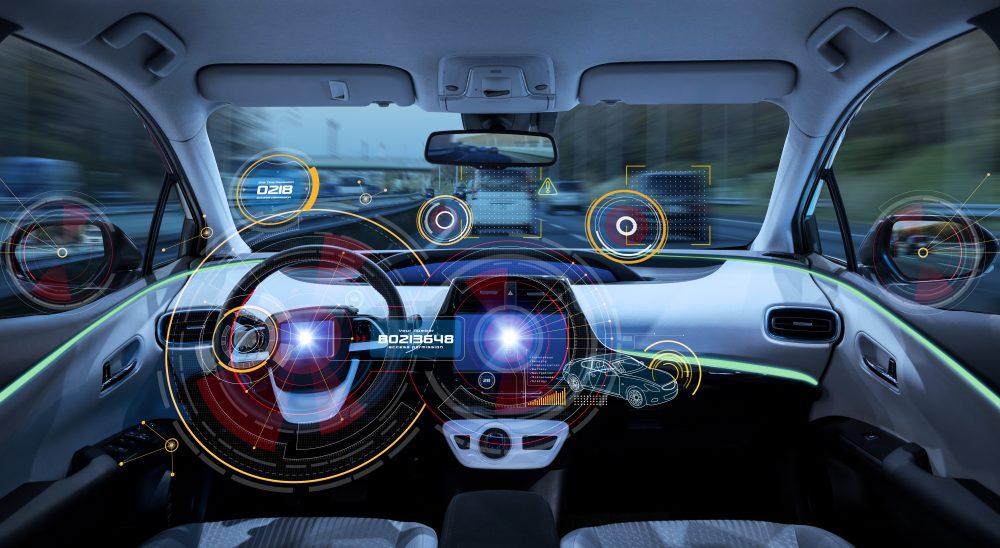

While driving in an urban environment, staying within the road lanes is mandatory. It keeps travel safe and reduces road accidents. Human drivers can be made to comply by enforcing lane rules. An autonomous vehicle has no human at the wheel to follow those rules, so the system itself must keep the vehicle in its lane. Geometrical analysis can help with this.

Forward-looking cameras provide images from which data about the longitudinal markings of the road can be extracted. Extracting these markings successfully lets the system work out where the vehicle is with respect to the boundaries of the lane. Furthermore, the lateral distances from the vehicle to the markings let the system determine the left and right boundaries of the lane, which enables the autonomous vehicle to stay in lane. Such geometric data is especially important in locations where GPS signals are unavailable or disrupted.
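As a rough illustration of the lane geometry, the sketch below takes the lateral distances to the left and right lane markings and derives the lane width and the vehicle's offset from the lane centre. It assumes the marking distances have already been extracted from the camera image and treats the vehicle as a single point. The function names, the proportional gain, and the steering limit are hypothetical choices made for this example.

```python
from dataclasses import dataclass


@dataclass
class LanePosition:
    lane_width_m: float
    offset_from_center_m: float  # positive: vehicle sits right of the lane centre


def lane_position(dist_left_m: float, dist_right_m: float) -> LanePosition:
    """Derive lane width and centre offset from the two lateral distances."""
    if dist_left_m < 0 or dist_right_m < 0:
        raise ValueError("lateral distances must be non-negative")
    lane_width = dist_left_m + dist_right_m
    # Being farther from the left marking than the right one means
    # the vehicle has drifted towards the right boundary.
    offset = (dist_left_m - dist_right_m) / 2.0
    return LanePosition(lane_width, offset)


def steering_correction(offset_m: float, gain: float = 0.5,
                        limit: float = 1.0) -> float:
    """Proportional correction, clamped to [-limit, limit].

    Negative values steer left, positive values steer right (assumed convention).
    """
    return max(-limit, min(limit, -gain * offset_m))


position = lane_position(dist_left_m=2.0, dist_right_m=1.5)
print(position)
print(steering_correction(position.offset_from_center_m))
```

Here the vehicle is 0.25 m right of centre in a 3.5 m lane, so the correction is a small steer to the left. Because the calculation depends only on what the camera sees, it keeps working where GPS is unavailable.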

Another way to ease geometrical analysis is to designate separate lanes for AVs. Since future mobility is expected to rely heavily on AVs, and many governments are supporting their development, providing a dedicated lane for AVs or installing magnetic strips along the lane boundaries could improve the working of autonomous vehicles.

Neural Network

Machine learning is meant for systems that require smartness and autonomy. Since an autonomous vehicle depends heavily on such algorithms, machine learning has great potential here.

A neural network (also called a neuronal network) works on the principles of machine learning and tries to replicate the human brain. Its artificial neurons are trained in much the same way as humans learn to make decisions. The network is first trained on datasets of examples; afterwards, data from the perception sensors is fed to the trained network, which makes decisions based on that input.

For example, while commuting from Point A to Point B, an autonomous vehicle stops at a red traffic signal even when the signal is at the far corner of the road. This intelligent behaviour comes from the embedded neural network. The network is trained to look for traffic lights at different heights and angles through a camera. The intelligence is embedded by gathering datasets of images that show traffic signals at different angles, positions, and elevations, and then training the network on them. It is also trained on the different lights: red, yellow, and green. Once trained on these datasets, the network embeds intelligence into the system, so whenever a similar situation occurs, the vehicle detects, analyses, and acts in the same way as a human would. Similarly, road signs, intersections, the distance to an obstacle or another vehicle, and path following are other key tasks that neural networks handle when analysing camera data.
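To make the training idea concrete, here is a minimal sketch of a classifier that learns to tell red, yellow, and green lights apart. It is a single-layer softmax classifier, the simplest form of neural network, standing in for the much deeper networks that process full camera images in practice. Everything about the data is an assumption made for illustration: each "image" is reduced to two hypothetical features (average red and green intensity of the lamp region), and the training set is synthetic.

```python
import math
import random

LABELS = ["red", "yellow", "green"]
# Idealized (red, green) channel intensities per lamp colour; illustrative only.
CENTERS = {"red": (1.0, 0.0), "yellow": (1.0, 1.0), "green": (0.0, 1.0)}


def make_dataset(samples_per_class, rng):
    """Generate noisy synthetic (features, label_index) pairs."""
    data = []
    for index, label in enumerate(LABELS):
        red, green = CENTERS[label]
        for _ in range(samples_per_class):
            # The trailing 1.0 is a constant bias input.
            features = [red + rng.uniform(-0.1, 0.1),
                        green + rng.uniform(-0.1, 0.1),
                        1.0]
            data.append((features, index))
    return data


def softmax(scores):
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(score - peak) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def class_scores(weights, features):
    return [sum(w * x for w, x in zip(row, features)) for row in weights]


def train(data, rng, epochs=200, learning_rate=0.5):
    """Stochastic gradient descent on the cross-entropy loss."""
    data = list(data)  # copy so shuffling does not reorder the caller's list
    weights = [[0.0, 0.0, 0.0] for _ in LABELS]
    for _ in range(epochs):
        rng.shuffle(data)
        for features, target in data:
            probabilities = softmax(class_scores(weights, features))
            for k, row in enumerate(weights):
                error = probabilities[k] - (1.0 if k == target else 0.0)
                for j in range(len(row)):
                    row[j] -= learning_rate * error * features[j]
    return weights


def predict(weights, red, green):
    scores = class_scores(weights, [red, green, 1.0])
    return LABELS[scores.index(max(scores))]


rng = random.Random(0)  # fixed seed so the run is repeatable
weights = train(make_dataset(30, rng), rng)
print(predict(weights, 0.95, 0.05))
```

The same pattern of gathering varied examples, training, and then predicting on new input is what lets a real network recognise signals at different heights, angles, and distances, only with image pixels as input and many more layers.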

Conclusion

The discussion above shows that the perception model of autonomous vehicles is multi-dimensional. The approach is novel and quite close to reality, and it aims to fulfil the prerequisites for making autonomous vehicles a safer choice. Comparing geometrical analysis with the neural network, the neural network seems more relevant and effective. The foremost reason is its AI and constant-learning aspect. While it is trained by default to drive on the roads freely, continual learning makes it more sustainable. Furthermore, it can take into account almost every constituent of the perception model: whether incoming data comes from a camera or LiDAR, a neural network is capable of translating this information and making appropriate decisions.